#include <PerceptronLayer.h>

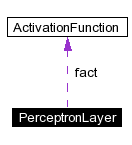

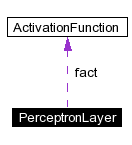

Collaboration diagram for PerceptronLayer:

| Public Member Functions | |
|---|---|
| | PerceptronLayer (unsigned int neuron_count, const ActivationFunction *fact) |
| | ~PerceptronLayer (void) |
| | PerceptronLayer (PerceptronLayer &source) |
| void | randomizeParameters (PerceptronLayer *succ, const RandomFunction *weight_func, const RandomFunction *theta_func) |
| void | resetDiffs (void) |
| void | setActivationFunction (const ActivationFunction *fact) |
| const ActivationFunction * | getActivationFunction (void) const |
| void | propagate (PerceptronLayer *pred) |
| void | backpropagate (PerceptronLayer *succ, vector< double > &output_optimal, double opt_tolerance) |
| void | postprocess (PerceptronLayer *succ, double epsilon, double weight_decay, double momterm) |
| void | update (void) |

| Public Attributes | |
|---|---|
| PerceptronLayerType | type |
| vector< PerceptronNeuron * > | neurons |

| Protected Attributes | |
|---|---|
| const ActivationFunction * | fact |

Most algorithm-related calculations are done at the layer level.

PerceptronLayer::PerceptronLayer (unsigned int neuron_count, const ActivationFunction *fact)

PerceptronLayer constructor. Creates a layer with neuron_count neurons.

PerceptronLayer::~PerceptronLayer (void)

PerceptronLayer destructor. Mainly removes all neurons contained in this layer.

PerceptronLayer::PerceptronLayer (PerceptronLayer &source)

Copy constructor.

void PerceptronLayer::backpropagate (PerceptronLayer *succ, vector< double > &output_optimal, double opt_tolerance)

Backpropagation algorithm. Computes the delta value for all neurons within this layer.
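As an illustration of what a per-layer delta computation typically looks like, the following is a minimal sketch of textbook backpropagation, not the library's implementation. The free functions, the weight matrix layout, the logistic activation, and the reading of `opt_tolerance` as a dead zone around the optimal output are all assumptions made for this example.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Derivative of the logistic sigmoid, expressed through its output value.
// (Assumed activation; the real layer uses whatever `fact` provides.)
inline double sigmoidDerivFromOutput(double out) { return out * (1.0 - out); }

// Output-layer deltas. Errors smaller than `tolerance` are treated as zero,
// which is one plausible reading of opt_tolerance (an assumption).
inline std::vector<double> outputDeltas(const std::vector<double> &output,
                                        const std::vector<double> &optimal,
                                        double tolerance) {
    assert(output.size() == optimal.size());
    std::vector<double> delta(output.size(), 0.0);
    for (std::size_t j = 0; j < output.size(); ++j) {
        double err = optimal[j] - output[j];
        if (std::fabs(err) < tolerance) err = 0.0;
        delta[j] = sigmoidDerivFromOutput(output[j]) * err;
    }
    return delta;
}

// Hidden-layer deltas. weights[i][j] is the (hypothetical) weight from
// neuron i of this layer to neuron j of the successor layer.
inline std::vector<double> hiddenDeltas(const std::vector<double> &output,
                                        const std::vector<std::vector<double>> &weights,
                                        const std::vector<double> &succDelta) {
    assert(weights.size() == output.size());
    std::vector<double> delta(output.size(), 0.0);
    for (std::size_t i = 0; i < output.size(); ++i) {
        assert(weights[i].size() == succDelta.size());
        double sum = 0.0;
        for (std::size_t j = 0; j < succDelta.size(); ++j)
            sum += weights[i][j] * succDelta[j];
        delta[i] = sigmoidDerivFromOutput(output[i]) * sum;
    }
    return delta;
}
```

In this sketch the output layer compares its outputs against the optimal values, while a hidden layer derives its deltas from the deltas of its successor, which is why the layers have to be processed from last to first.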

const ActivationFunction * PerceptronLayer::getActivationFunction (void) const

Getter for the activation function.

void PerceptronLayer::postprocess (PerceptronLayer *succ, double epsilon, double weight_decay, double momterm)

Postprocess algorithm for a single layer.

void PerceptronLayer::propagate (PerceptronLayer *pred)

Forward propagation algorithm. The input and output signals of this layer are calculated from the output signals of the pred layer.
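The following minimal sketch shows one common way such a forward step can be computed. It is not the library's code: `SketchLayer`, the separate weight matrix, the logistic activation, and the convention that theta is subtracted from the weighted sum are all assumptions made for this example.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for a layer; not the library's PerceptronLayer.
struct SketchLayer {
    std::vector<double> input;   // net input signal of each neuron
    std::vector<double> output;  // activated output signal of each neuron
    std::vector<double> theta;   // per-neuron threshold (assumed to be subtracted)
};

// Assumed activation function: logistic sigmoid.
inline double sigmoid(double x) { return 1.0 / (1.0 + std::exp(-x)); }

// weights[i][j] is the (hypothetical) weight from pred neuron i to neuron j
// of `layer`. Fills layer.input and layer.output from pred.output.
inline void propagateSketch(const SketchLayer &pred,
                            const std::vector<std::vector<double>> &weights,
                            SketchLayer &layer) {
    assert(weights.size() == pred.output.size());
    assert(layer.input.size() == layer.output.size());
    assert(layer.theta.size() == layer.output.size());
    for (std::size_t j = 0; j < layer.output.size(); ++j) {
        double net = -layer.theta[j];
        for (std::size_t i = 0; i < pred.output.size(); ++i) {
            assert(weights[i].size() == layer.output.size());
            net += weights[i][j] * pred.output[i];
        }
        layer.input[j] = net;            // input signal
        layer.output[j] = sigmoid(net);  // output signal
    }
}
```

Calling such a function layer by layer, from the first hidden layer to the output layer, yields the network output for one input pattern.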

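void PerceptronLayer::randomizeParameters (PerceptronLayer *succ, const RandomFunction *weight_func, const RandomFunction *theta_func)

Network randomization function. Randomizes the variable network parameters: weights and theta values. Separate random functions can be supplied for the weights and the theta values, for greater customizability.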
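To illustrate why separate generators for weights and theta values are useful, here is a minimal sketch. The `RandomFunction` interface is not described on this page, so the sketch substitutes a plain callable (`SketchRandom`); the parameter container and both helper functions are hypothetical as well.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <random>
#include <vector>

// Hypothetical stand-in for RandomFunction: any callable returning a double.
using SketchRandom = std::function<double()>;

// Hypothetical parameter container for one layer.
struct SketchParams {
    std::vector<std::vector<double>> weights;  // weights[i][j]: neuron i -> successor neuron j
    std::vector<double> theta;                 // one threshold per neuron
};

// Draws every weight from weightFunc and every theta from thetaFunc.
inline SketchParams randomizeSketch(std::size_t neurons, std::size_t succNeurons,
                                    const SketchRandom &weightFunc,
                                    const SketchRandom &thetaFunc) {
    assert(weightFunc && thetaFunc);
    SketchParams p;
    p.weights.assign(neurons, std::vector<double>(succNeurons, 0.0));
    p.theta.assign(neurons, 0.0);
    for (std::size_t i = 0; i < neurons; ++i) {
        p.theta[i] = thetaFunc();
        for (std::size_t j = 0; j < succNeurons; ++j)
            p.weights[i][j] = weightFunc();
    }
    return p;
}

// Example generator: uniform values in [lo, hi) from a seeded engine.
inline SketchRandom uniformSketch(double lo, double hi, unsigned seed) {
    std::mt19937 engine(seed);
    std::uniform_real_distribution<double> dist(lo, hi);
    return [engine, dist]() mutable { return dist(engine); };
}
```

With this split, a call such as `randomizeSketch(3, 2, uniformSketch(-0.5, 0.5, 1), uniformSketch(0.0, 0.1, 2))` can use a wide symmetric range for the weights and a narrow one for the thresholds.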

void PerceptronLayer::resetDiffs (void)

Reset function for learned differences. Resets all parameters that are learned by the backpropagation and postprocess algorithms. It has to be called after an update has been made. This way, both online and batch learning can be implemented.
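The difference between online and batch learning then comes down to where the update and reset calls are placed. The sketch below models this with a single hypothetical parameter (`SketchParam`); it only mirrors the call order described above and assumes that learned changes are accumulated additively, which this page does not state.

```cpp
#include <cassert>
#include <vector>

// Hypothetical single parameter with an accumulated, not yet applied change.
struct SketchParam {
    double value = 0.0;
    double diff = 0.0;

    void accumulate(double change) { diff += change; }  // backpropagate/postprocess stage
    void update() { value += diff; }                    // apply the accumulated change
    void resetDiffs() { diff = 0.0; }                   // clear after an update
};

// Online learning: update and reset after every training sample.
inline double trainOnline(SketchParam p, const std::vector<double> &changes) {
    for (double c : changes) {
        p.accumulate(c);
        p.update();
        p.resetDiffs();
    }
    return p.value;
}

// Batch learning: accumulate over all samples, then update and reset once.
inline double trainBatch(SketchParam p, const std::vector<double> &changes) {
    for (double c : changes) p.accumulate(c);
    p.update();
    p.resetDiffs();
    return p.value;
}

// What goes wrong without the reset: earlier changes are applied again.
inline double trainWithoutReset(SketchParam p, const std::vector<double> &changes) {
    for (double c : changes) {
        p.accumulate(c);
        p.update();  // diff still contains all previous changes
    }
    return p.value;
}
```

In this toy model the changes are fixed numbers, so online and batch training end at the same value. In a real network the changes computed for later samples depend on the weights already updated, so the two schemes generally differ.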

void PerceptronLayer::setActivationFunction (const ActivationFunction *fact)

Setter for the activation function.

void PerceptronLayer::update (void)

Update algorithm for a single layer.

const ActivationFunction* PerceptronLayer::fact [protected]

Activation function and its derivative. Used for both propagation and backpropagation. Must be set and can be different for each layer.
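A function object that bundles an activation function with its derivative might look like the following sketch. The real `ActivationFunction` interface is not shown on this page, so the class names and the `value`/`derivative` methods here are hypothetical.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical interface; the real ActivationFunction class may differ.
class SketchActivation {
public:
    virtual ~SketchActivation() = default;
    virtual double value(double x) const = 0;       // used during propagation
    virtual double derivative(double x) const = 0;  // used during backpropagation
};

// Logistic sigmoid, output range (0, 1).
class SketchLogistic : public SketchActivation {
public:
    double value(double x) const override { return 1.0 / (1.0 + std::exp(-x)); }
    double derivative(double x) const override {
        const double y = value(x);
        return y * (1.0 - y);
    }
};

// Hyperbolic tangent, output range (-1, 1).
class SketchTanh : public SketchActivation {
public:
    double value(double x) const override { return std::tanh(x); }
    double derivative(double x) const override {
        const double y = std::tanh(x);
        return 1.0 - y * y;
    }
};

// The function "must be set": this helper refuses a null pointer.
inline double activate(const SketchActivation *fact, double x) {
    assert(fact != nullptr);
    return fact->value(x);
}
```

Because each layer holds its own pointer, a hidden layer could for example use the tanh variant while the output layer uses the logistic one.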

vector< PerceptronNeuron * > PerceptronLayer::neurons

Every layer contains at least one neuron. This is the list of neurons within this layer.

PerceptronLayerType PerceptronLayer::type

Type of the layer within the network. The input layer is always the first layer and the output layer is always the last layer in the network. Every other layer must be a hidden layer.

1.3-rc3
